One Very Simple Way to Host a Website

I generally enjoy tinkering, and I like to optimise things as much as I can. However, when it came to hosting this website, I found that this habit was actually holding me back. I spent forever deciding which tech stack to use and tried various combinations of things, but I never actually got around to publishing anything. Finally, I decided to just pick something that was simple but that still met all my needs.

Step 1 : Static Site Generator

In the beginning, I was debating whether to build a dynamic or a static site. Since this is a basic website that mainly consists of my blog, I realised there was no reason for it to be dynamic.

Okay, so there are many static site generators out there. I quickly settled on Hugo. I didn’t benchmark it or anything, but I liked its simplicity, syntax and speed. I especially liked that it has a built-in web server that you can use to preview a draft version of your website on your local machine. I converted the few posts I’d written in Markdown into a basic site and liked how it looked.
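Hugo's built-in preview server is started with hugo server. The --buildDrafts flag (short form -D) also renders posts still marked as drafts. By default the site is served at http://localhost:1313/ and reloads as you edit. The wrapper name preview_site is only an illustration:

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: preview the site locally, including draft posts.
# Any extra arguments (e.g. --port 8080) are passed straight to "hugo server".
preview_site() {
  hugo server --buildDrafts "$@"
}
```

Run it from the root of your Hugo site, e.g. preview_site or preview_site --port 8080.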

Step 2 : Hosting Provider

I don’t really mind where my website is hosted. The important thing is that the provider is reliable, reasonably priced, and has an easy-to-use interface. I ended up using DigitalOcean — I was familiar with them from small projects in the past, and they offered really good prices for what I needed. Finally, they also met my deal-breaker requirement: supporting IPv6. See my other post about that.

Step 3 : Domain Name Registrar

Nothing special here. I don’t think any one registrar is particularly better than the others. I ended up using Porkbun, since they had the best prices for the TLD I wanted to use.

Step 4 : Web Server

I know Apache really well, and I can get by with nginx. But I’m so glad I stumbled upon Caddy. It may not scale as well as something like nginx, but it is perfect for a small website, and it has two killer features:

- It has a very simple config file

- It automatically obtains and renews TLS certificates for you

The first one is great and already had me convinced. However, the second one is just crazy! I am quite capable of setting up a bot to get certs from Let’s Encrypt and later renew them. But not having to bother with that and simply letting my webserver do it for me feels futuristic, to say the least.

Step 5 : Web Log Analyser

I was strongly considering Matomo, as it’s a nice open-source alternative to Google Analytics. Before settling, though, I decided to look around and see what other options there were. I quite liked GoatCounter as well, but in the end I settled on GoAccess for two simple reasons: I can install it from a regular Linux package manager, and it doesn’t need a database.

Step 6 : Putting It All Together

Nameservers

After buying your domain name, point the authoritative nameservers on your domain name registrar’s website to DigitalOcean’s nameservers: ns1.digitalocean.com, ns2.digitalocean.com, and ns3.digitalocean.com.
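Nameserver changes can take a while to propagate. The sketch below gives you a quick way to check. It assumes dig is installed (on Fedora it comes from the bind-utils package), and the function name uses_digitalocean_ns is hypothetical. The function reads the output of dig +short NS for your domain and succeeds only if all three DigitalOcean nameservers are listed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds (exit 0) only if every DigitalOcean nameserver
# appears in the NS list read from stdin, e.g. the output of "dig +short NS".
uses_digitalocean_ns() {
  local listed ns
  # Normalise: lowercase, and strip the trailing dot dig prints after each name.
  listed=$(tr '[:upper:]' '[:lower:]' | sed 's/\.$//')
  for ns in ns1.digitalocean.com ns2.digitalocean.com ns3.digitalocean.com; do
    # -x: whole-line match, -F: treat the dots literally rather than as regex.
    if ! grep -qxF "$ns" <<<"$listed"; then
      echo "missing nameserver: $ns" >&2
      return 1
    fi
  done
}
```

Usage: dig +short NS example.com | uses_digitalocean_ns && echo "Nameservers OK"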

Create a DigitalOcean droplet

I selected the following options:

- Latest Fedora Linux distro

- Basic plan

- Regular Intel CPU with SSD

- $5/month option

- Datacenter location doesn’t matter. Probably choose the one that’s geographically located closest to you or your intended audience.

- I ticked the IPv6 and Monitoring options (both are free)

- I selected SSH key login instead of password login.

Finally, I added my SSH key and assigned the droplet to my default project.

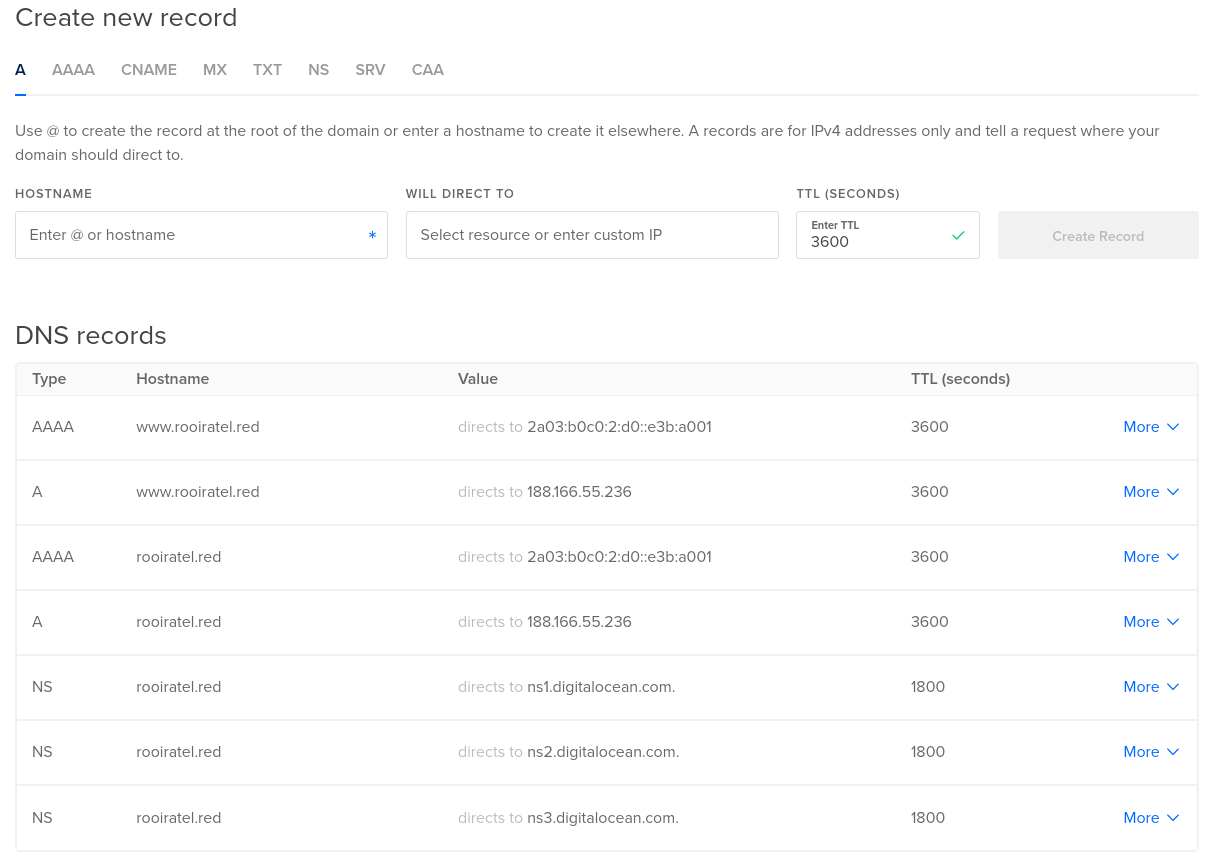

DNS Records

Once the droplet was created, I went to its “Domains” setting and added the A, AAAA and NS records for my domain. The A and AAAA records point the domain at the droplet’s IPv4 and IPv6 addresses.
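Once the records have propagated, you can confirm that they point at the droplet. The sketch below assumes dig is available, and the function name record_points_to is hypothetical. It checks whether a record of a given type contains the address you expect.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds if the DNS record of the given type for a
# domain contains the expected value. Requires dig (bind-utils on Fedora).
record_points_to() {
  local domain=$1 type=$2 expected=$3 records
  # dig +short prints one record value per line.
  records=$(dig +short "$type" "$domain")
  # Match the whole line literally.
  grep -qxF -- "$expected" <<<"$records"
}
```

For example, the calls below use documentation-range placeholder addresses; substitute your droplet's real ones:

record_points_to example.com A 203.0.113.10 && echo "A record OK"

record_points_to example.com AAAA 2001:db8::10 && echo "AAAA record OK"

The match is a plain string comparison. Write the IPv6 address in the same compressed, lowercase form that dig prints.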

Server setup

SSH into your server and run dnf update to bring the system up to date. Then install the following packages:

- goaccess

- caddy
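The two steps above can be sketched as a small function. It assumes you are logged in as root, as the rsync command at the end of this post does. Otherwise, prefix each command with sudo. The name setup_server is hypothetical, and the last two commands simply confirm that both tools are installed.

```shell
#!/usr/bin/env bash
# Hypothetical setup function for a fresh Fedora droplet; nothing runs until
# you call it. Assumes a root shell.
setup_server() {
  dnf -y update &&
  dnf -y install caddy goaccess &&
  # Print the installed versions as a quick sanity check.
  caddy version &&
  goaccess --version
}
```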

Caddy setup

Create a Caddyfile. You can store it anywhere, but it typically lives at /etc/caddy/Caddyfile.

Mine looks something like this:

```caddyfile
www.example.com {
	redir https://example.com{uri}
}

example.com {
	root * /var/www/html
	encode zstd gzip
	file_server

	# The 404 path below is relative to the root directory defined above
	handle_errors {
		@404 {
			expression {http.error.status_code} == 404
		}
		handle @404 {
			rewrite * /404.html
			file_server
		}
	}

	header {
		Strict-Transport-Security "max-age=31536000; includeSubDomains; preload"
		X-Xss-Protection "1; mode=block"
		X-Content-Type-Options "nosniff"
		X-Frame-Options "DENY"
		Content-Security-Policy "upgrade-insecure-requests"
		Referrer-Policy "strict-origin-when-cross-origin"
		Cache-Control "public, max-age=31557600, must-revalidate"
	}

	log {
		output file /var/log/caddy_access.log {
			# roll_disabled
			roll_size 5000MiB
			# roll_keep <num>
			# roll_keep_for <days>
		}
	}
}
```

The first block redirects the pointless www subdomain to the bare domain. The second block sets the root directory where your website content will be stored, enables zstd and gzip compression, and serves the static files. The handle_errors subsection serves the 404.html page whenever a request results in an HTTP 404 response. In the header subsection, I enabled some security headers and a long Cache-Control lifetime. Finally, in the log subsection, I told Caddy where to store its access log and how to rotate it.
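Once the site is up, you can check that the security headers from the Caddyfile are actually being sent. The sketch below uses a hypothetical function, missing_security_headers. It reads response headers on stdin, for example from curl -sI, and prints any that are missing. Header names are matched case-insensitively, because HTTP/2 responses use lowercase header names.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads HTTP response headers on stdin and prints any of
# the expected security headers that are absent. Returns 1 if any are missing.
missing_security_headers() {
  local headers name missing=0
  headers=$(tr -d '\r')   # curl prints CRLF line endings
  for name in Strict-Transport-Security X-Xss-Protection X-Content-Type-Options \
              X-Frame-Options Content-Security-Policy Referrer-Policy; do
    # Case-insensitive match on "Name:" at the start of a line.
    if ! grep -qi "^${name}:" <<<"$headers"; then
      echo "missing: $name"
      missing=1
    fi
  done
  return "$missing"
}
```

Usage: curl -sI https://example.com | missing_security_headers

Before (re)starting Caddy, caddy validate --config /etc/caddy/Caddyfile checks the file for errors. caddy fmt --overwrite /etc/caddy/Caddyfile normalises its formatting.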

Now, create a systemd service for running Caddy and enable it. Save it at /etc/systemd/system/caddy.service

Mine looks something like this:

```ini
[Unit]
Description=Caddy web server
Documentation=https://caddyserver.com/docs
After=network.target

[Service]
User=root
LimitNOFILE=8192
ExecStart=/usr/bin/caddy run --config /etc/caddy/Caddyfile
Restart=on-failure

[Install]
WantedBy=multi-user.target
```
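Enabling the unit takes three systemctl calls. The sketch below wraps them in a hypothetical function, enable_caddy, meant to be run as root. It reloads systemd so the new unit file is picked up, starts Caddy now and on every boot, and then confirms the service is active.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper for enabling the Caddy unit; run as root.
enable_caddy() {
  # Make systemd re-read unit files, including the new caddy.service.
  systemctl daemon-reload &&
  # Start Caddy immediately and on every boot.
  systemctl enable --now caddy.service &&
  # Exit status 0 only if the service is running.
  systemctl is-active --quiet caddy.service
}
```

If it fails, journalctl -u caddy shows Caddy's startup messages.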

GoAccess setup

Run the following command to generate a report from your Caddy logs:

```shell
goaccess /var/log/caddy_access.log -o /var/www/html/stats.html --log-format=CADDY --real-time-html --daemonize
```

If you browse to https://example.com/stats.html, you can read the nicely formatted report that GoAccess generated, detailing things like most visited pages, number of visitors, geolocation of visitors, TLS version etc.

You can also tweak these settings (log file, output file, log format and much more) in the goaccess.conf file, normally located at /etc/goaccess/goaccess.conf, but I’m happy with the defaults.
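A process started with --daemonize will not come back by itself after a reboot. The sketch below uses a hypothetical function, ensure_goaccess, that restarts the report with the same command as above, but only if no goaccess process is already running. How you trigger it (by hand or from a boot script) is up to you.

```shell
#!/usr/bin/env bash
# Hypothetical helper: start the GoAccess real-time report unless a goaccess
# process is already running. Paths match the command used above.
ensure_goaccess() {
  if pgrep -x goaccess >/dev/null; then
    echo "goaccess already running"
    return 0
  fi
  goaccess /var/log/caddy_access.log -o /var/www/html/stats.html \
    --log-format=CADDY --real-time-html --daemonize
}
```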

Actually put your content online

Once you are happy with how your website is looking on your local machine (using Hugo to preview it), execute the hugo command, and it will generate the final HTML output in the public subdirectory.

Now, you can rsync the output onto your server. And just like that, your website is live!

```shell
rsync -avzP -e "ssh -i ~/.ssh/your_ssh_key" /path/to/hugo/root/dir/public/ root@example.com:/var/www/html
```
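The build and upload steps can be combined into one small deploy script. In the sketch below, SITE_DIR, SSH_KEY and REMOTE are placeholder variables, and the function name deploy_site is hypothetical. The function builds the site with hugo --source and then uploads the contents of public/ with the same rsync flags as above.

```shell
#!/usr/bin/env bash
# Hypothetical deploy script. The defaults are placeholders; override them via
# environment variables or edit them to match your setup.
SITE_DIR=${SITE_DIR:-/path/to/hugo/root/dir}
SSH_KEY=${SSH_KEY:-~/.ssh/your_ssh_key}
REMOTE=${REMOTE:-root@example.com:/var/www/html}

deploy_site() {
  # Build into $SITE_DIR/public; stop if the build fails.
  hugo --source "$SITE_DIR" || return 1
  # The trailing slash on public/ copies its contents, not the directory itself.
  rsync -avzP -e "ssh -i $SSH_KEY" "$SITE_DIR/public/" "$REMOTE"
}
```

If you also want files you have deleted locally to disappear from the server, rsync's --delete flag does that. Double-check the destination path before using it.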

Conclusion

It was a long explanation, but I got this all up and running in, like, 20 minutes. It’s really fast and simple. And I’ll probably be using this setup for a long time thanks to the very low maintenance requirements.